Leveraging advanced artificial intelligence, Facebook has rolled out a new content moderation system that can detect and remove harmful content more efficiently. This system is designed to keep the platform safer by identifying and addressing offensive posts in real-time.

In an era where social media platforms are inundated with content, ensuring the safety and well-being of users has become paramount. Facebook's new AI-powered content moderation system is a significant leap forward in maintaining a secure online environment.

AI-powered content moderation is a sophisticated process that leverages advanced technologies to manage and filter content on social media platforms. Here’s an in-depth look at how this system operates within Facebook:

The foundation of Facebook's content moderation system lies in its advanced machine learning algorithms. These algorithms are meticulously trained on massive datasets comprising various types of content to recognize patterns and identify harmful material, including hate speech, violent imagery, and misinformation.
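The following is a minimal sketch of the general idea of "learning patterns from labeled examples". It is not Facebook's system. It assumes a tiny, hand-labeled toy dataset and uses a multinomial naive Bayes classifier, a classic text-classification technique chosen here only because it fits in a few lines. `NaiveBayesModerator`, `TRAINING_DATA` and the labels `"harmful"` and `"ok"` are hypothetical names. Production systems rely on far larger models and datasets.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesModerator:
    """Hypothetical toy classifier: multinomial naive Bayes with Laplace smoothing."""

    def __init__(self) -> None:
        self.word_counts = {"harmful": Counter(), "ok": Counter()}
        self.doc_counts = Counter()

    def train(self, examples: list[tuple[str, str]]) -> None:
        # Count how often each word appears under each label.
        for text, label in examples:
            self.doc_counts[label] += 1
            self.word_counts[label].update(tokenize(text))

    def predict(self, text: str) -> str:
        vocab = set(self.word_counts["harmful"]) | set(self.word_counts["ok"])
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            # Log prior plus the sum of smoothed log likelihoods of each word.
            score = math.log(self.doc_counts[label] / total_docs)
            for word in tokenize(text):
                score += math.log((counts[word] + 1) / (total_words + len(vocab)))
            scores[label] = score
        return max(scores, key=scores.get)


# Hypothetical, hand-labeled toy data.
TRAINING_DATA = [
    ("I will hurt you", "harmful"),
    ("you are worthless and stupid", "harmful"),
    ("go away you idiot", "harmful"),
    ("great photo from the beach", "ok"),
    ("happy birthday to my friend", "ok"),
    ("thanks for sharing this recipe", "ok"),
]

model = NaiveBayesModerator()
model.train(TRAINING_DATA)
print(model.predict("you stupid idiot"))         # harmful
print(model.predict("happy to see this photo"))  # ok
```

The model never sees a rule such as "idiot is bad". It infers that from word frequencies in the labeled data, which is the sense in which such systems "recognize patterns".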

A key feature of Facebook's AI moderation system is its real-time detection capability. The system continuously scans and evaluates content as it is uploaded, allowing for immediate action.
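Here is one way to picture "evaluating content as it is uploaded". This hypothetical sketch scores each post at upload time and acts on it immediately. The two thresholds, the `send_to_review` tier and `toy_score` (a crude stand-in for a trained model) are all assumptions for illustration, not details of Facebook's pipeline.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical thresholds; a real system would tune these per policy.
REMOVE_THRESHOLD = 0.9
REVIEW_THRESHOLD = 0.5


@dataclass
class Post:
    post_id: int
    text: str


def moderate_on_upload(post: Post, score: Callable[[str], float]) -> str:
    """Score a post at upload time and decide immediately what to do with it."""
    p_harmful = score(post.text)
    if p_harmful >= REMOVE_THRESHOLD:
        return "remove"
    if p_harmful >= REVIEW_THRESHOLD:
        return "send_to_review"  # hypothetical middle tier for uncertain cases
    return "publish"


def toy_score(text: str) -> float:
    """Hypothetical stand-in for a trained model: share of words on a blocklist."""
    blocklist = {"idiot", "worthless", "hurt"}
    words = text.lower().split()
    if not words:
        return 0.0
    return sum(word in blocklist for word in words) / len(words)


incoming = [
    Post(1, "great photo from the beach"),
    Post(2, "you worthless idiot"),
    Post(3, "idiot"),
]
for post in incoming:
    print(post.post_id, moderate_on_upload(post, toy_score))
# 1 publish
# 2 send_to_review
# 3 remove
```

Passing the scoring function in as a parameter keeps the decision logic separate from the model, so the model can be swapped or retrained without changing how uploads are handled.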

One of the most challenging aspects of content moderation is understanding the context in which content is shared. Traditional moderation tools often miss this nuance. As a result, they wrongly flag benign content or let harmful content through. Facebook's system uses natural language processing to take that context into account when it evaluates a post.
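To see why context matters, consider a naive keyword filter, a hypothetical stand-in for the traditional tools mentioned above. The blocklist and the example posts are invented for illustration. The filter flags harmless idioms that contain a blocked word, yet misses a hostile message that avoids every blocked word.

```python
import re

# Hypothetical blocklist for a naive, context-blind keyword filter.
BLOCKLIST = {"kill", "killed", "shoot"}


def keyword_filter(text: str) -> bool:
    """Flag a post if any of its words is on the blocklist, ignoring context."""
    words = re.findall(r"[a-z]+", text.lower())
    return any(word in BLOCKLIST for word in words)


# Invented examples mapped to whether they are actually harmful.
examples = {
    "The band absolutely killed it last night!": False,      # benign idiom
    "Going to shoot some photos at the park": False,         # benign
    "People like you should not be allowed to live": True,   # hostile, no blocked word
}

for text, is_harmful in examples.items():
    flagged = keyword_filter(text)
    if flagged and not is_harmful:
        verdict = "false positive"
    elif not flagged and is_harmful:
        verdict = "false negative"
    else:
        verdict = "correct"
    print(f"{verdict:>14}: {text}")
```

All three posts are misjudged, which is the gap that context-aware language models aim to close.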

Taken together, AI-powered content moderation on Facebook combines machine learning algorithms, real-time detection, and contextual understanding through natural language processing (NLP). These elements work together to identify and mitigate harmful content and help keep the online environment safer and more respectful. By continuously refining these technologies, Facebook aims to stay ahead of emerging threats and maintain the integrity of its platform.

AI-powered content moderation offers numerous advantages that significantly enhance the quality and safety of social media platforms like Facebook. Here are some of the key benefits:

The primary benefit of AI-powered content moderation is the enhanced safety it provides to users. By rapidly identifying and removing harmful content, the system helps create a more welcoming and secure environment.

Given the vast amount of content posted on Facebook daily, manual moderation alone cannot keep up. AI-powered moderation offers a scalable solution, enabling Facebook to process this volume efficiently.

AI systems can be retrained as new examples of harmful content emerge, so they learn and adapt over time and become increasingly effective at content moderation.
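A minimal sketch of "learning over time", assuming a model that updates itself from a stream of newly labeled posts. It uses an online perceptron, a simple learning rule chosen purely for illustration rather than anything Facebook is known to use. `OnlinePerceptron` is a hypothetical name, and "zorp" stands in for a newly coined insult the model has never seen.

```python
class OnlinePerceptron:
    """Hypothetical bag-of-words perceptron (no bias term) that learns one post at a time."""

    def __init__(self) -> None:
        self.weights: dict[str, float] = {}

    def score(self, text: str) -> float:
        return sum(self.weights.get(word, 0.0) for word in text.lower().split())

    def predict(self, text: str) -> bool:
        """True means the post is classified as harmful."""
        return self.score(text) > 0

    def update(self, text: str, is_harmful: bool) -> None:
        """Perceptron rule: change the weights only when the prediction was wrong."""
        if self.predict(text) == is_harmful:
            return
        step = 1.0 if is_harmful else -1.0
        for word in text.lower().split():
            self.weights[word] = self.weights.get(word, 0.0) + step


model = OnlinePerceptron()
print(model.predict("total zorp"))  # False: the new insult is unknown

# Hypothetical stream of newly labeled posts (assumed to come from reviewers).
for text, is_harmful in [("you zorp", True), ("thank you", False)]:
    model.update(text, is_harmful)

print(model.predict("total zorp"))  # True: the model has picked up the new term
print(model.predict("thank you"))   # False: the benign phrase was corrected
```

The second update matters: after the first one, "you" carries harmful weight, and only the benign counterexample pulls it back. Continuous improvement depends on feedback in both directions.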

In summary, AI-powered content moderation provides significant benefits, including enhanced safety, scalability, and continuous improvement. With these systems, Facebook can maintain a secure, welcoming environment for its users, manage the vast volume of content posted daily, and adapt to emerging threats. This not only improves the user experience but also strengthens the platform’s integrity and trustworthiness.

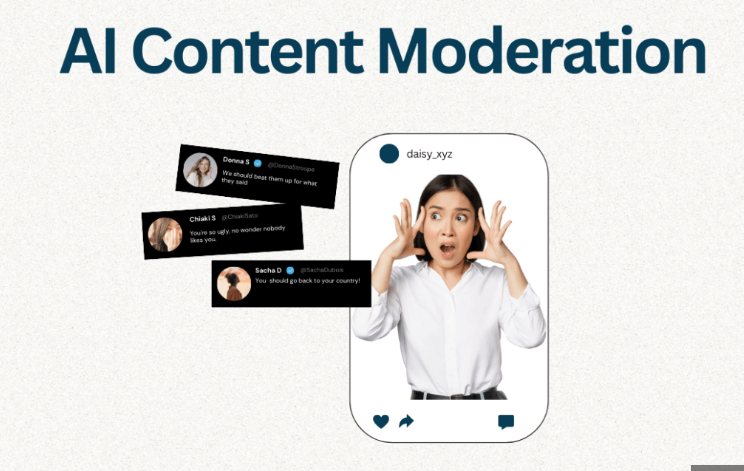

While Facebook's AI-powered content moderation system offers significant benefits, it also faces several challenges and limitations that need to be addressed.

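One of the primary challenges of AI content moderation is the occurrence of false positives and false negatives. A false positive occurs when benign content is wrongly flagged or removed. A false negative occurs when harmful content slips through undetected.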
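False positives and false negatives are usually tracked by comparing the system's decisions with human-verified labels. The hypothetical sketch below does this for an invented sample of eight posts and derives precision, recall and the false positive rate from the counts. `ModerationMetrics` and `evaluate` are illustrative names, not a Facebook API.

```python
from dataclasses import dataclass


@dataclass
class ModerationMetrics:
    true_positives: int
    false_positives: int
    false_negatives: int
    true_negatives: int

    @property
    def precision(self) -> float:
        """Share of flagged posts that were actually harmful."""
        flagged = self.true_positives + self.false_positives
        return self.true_positives / flagged if flagged else 0.0

    @property
    def recall(self) -> float:
        """Share of harmful posts that the system caught."""
        harmful = self.true_positives + self.false_negatives
        return self.true_positives / harmful if harmful else 0.0

    @property
    def false_positive_rate(self) -> float:
        """Share of benign posts that were wrongly flagged."""
        benign = self.false_positives + self.true_negatives
        return self.false_positives / benign if benign else 0.0


def evaluate(predicted: list[bool], actual: list[bool]) -> ModerationMetrics:
    """Compare flagged (True) / not flagged (False) decisions with true labels."""
    pairs = list(zip(predicted, actual))
    return ModerationMetrics(
        true_positives=sum(p and a for p, a in pairs),
        false_positives=sum(p and not a for p, a in pairs),
        false_negatives=sum(not p and a for p, a in pairs),
        true_negatives=sum(not p and not a for p, a in pairs),
    )


# Invented sample: what the system flagged vs. what was actually harmful.
predicted = [True, True, False, False, True, False, False, False]
actual = [True, False, True, False, True, False, False, False]

m = evaluate(predicted, actual)
print(m.false_positives, m.false_negatives)  # 1 1
print(f"precision={m.precision:.2f} recall={m.recall:.2f} fpr={m.false_positive_rate:.2f}")
# precision=0.67 recall=0.67 fpr=0.20
```

The two error types pull against each other. Raising the threshold for removal tends to reduce false positives at the cost of more false negatives, and lowering it does the reverse.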

The use of AI for content moderation also raises significant privacy concerns among users, because the system must scan and analyze the content they post.

Conclusion

Facebook's AI-powered content moderation system represents a significant advancement in creating a safer online environment. By leveraging advanced artificial intelligence, Facebook can detect and remove harmful content more efficiently and help keep the platform a welcoming space for all users. The system still faces false positives, false negatives, and privacy concerns. However, continuous improvement of its AI algorithms, combined with a balanced approach to privacy, can make it a robust and effective solution for content moderation in the digital age. As the system evolves, Facebook will need to address these challenges proactively to maintain user trust and uphold the integrity of the platform.

By implementing these AI-driven strategies, Facebook not only enhances user safety but also sets a new standard for content moderation in the social media landscape. As AI technology continues to evolve, we can expect even greater advancements in maintaining the integrity and safety of online communities.

For more insights and updates on effective social media strategies, make sure to follow Accnice and our tutorial blog, where we share the latest and most effective content marketing tips.